AI Companions – Loneliness is a widespread problem around the world, on a scale that some compare to a pandemic. Psychologists suggest it may contribute to depression, anxiety, and other health problems, and people have looked for various ways to manage it.

While some methods – such as networking and pursuing creative hobbies – are practical and beneficial, others – such as relying on dating apps – can have negative consequences.

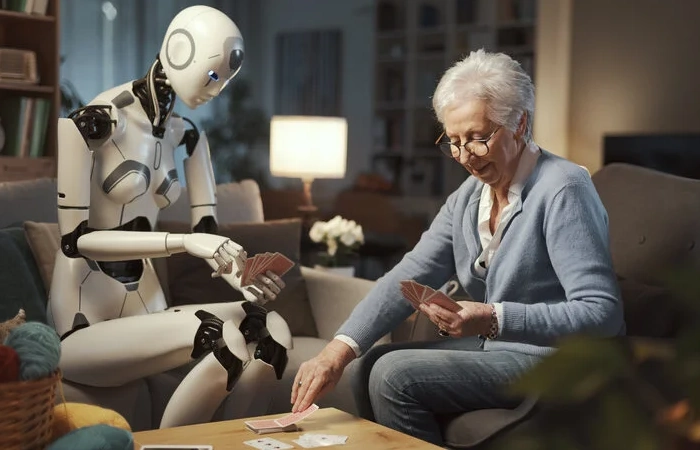

Some people have turned to artificial intelligence (AI) companions to alleviate their loneliness. These chatbot companions stand in for human interaction and are reported to have yielded promising results. They can be treated as friends or romantic partners, providing an outlet for emotional expression, flirtation, or casual conversation.

However, it is essential to question the validity of these claims about the effectiveness of AI companions and consider their various use cases, safety, ethics, and affordability.

What is an AI companion?

An AI companion is a chatbot designed to provide companionship to people who are lonely and need someone to talk to. You type your queries, questions, or thoughts, and the chatbot responds much as a human would. The market offers a wide range of AI companions, some of which are becoming very popular.

AI companion use cases

In general, AI companions are created to provide companionship and reduce feelings of loneliness. Although this field is still developing, existing products can offer a satisfactory experience.

Here are some of their capabilities:

- Engage in conversations with users on various topics, based on their training. These chatbots are programmed to recognize and respond to the emotions and feelings a user expresses;

- Provide suggestions or solutions to problems, although they are not a substitute for professional psychiatric or psychological advice;

- Act as a compassionate listener, allowing users to express their thoughts and feelings openly without judgment.

However, it is essential to note that AI chatbots should not be considered a substitute for human interaction and professional assistance when necessary.
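To make the capabilities above more concrete, here is a deliberately simple sketch of how a companion might detect an emotion and choose a reply. Everything in it is an assumption made for illustration: the keyword lists, the canned replies, and the function names `detect_emotion` and `companion_reply` are hypothetical, and real products typically rely on trained language models rather than keyword matching.

```python
import re

# Hypothetical keyword lists for illustration only; a real companion
# would typically use a trained model instead of fixed keywords.
EMOTION_KEYWORDS = {
    "sad": {"sad", "lonely", "alone", "heartbroken"},
    "anxious": {"anxious", "worried", "nervous", "scared"},
    "happy": {"happy", "glad", "excited", "great"},
}

# Hypothetical canned replies, one per detected emotion.
REPLIES = {
    "sad": "That sounds hard. I'm here to listen. What has been weighing on you?",
    "anxious": "That sounds stressful. Would it help to talk through what worries you?",
    "happy": "That's lovely to hear! What made your day good?",
    "neutral": "Tell me more about that.",
}

# Messages matching this pattern are redirected to professional help,
# reflecting the point that a chatbot is not a substitute for it.
CRISIS_PATTERN = re.compile(r"\b(suicide|kill myself|self-harm)\b", re.IGNORECASE)


def detect_emotion(message: str) -> str:
    """Return the first emotion whose keywords appear in the message."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    for emotion, keywords in EMOTION_KEYWORDS.items():
        if words & keywords:
            return emotion
    return "neutral"


def companion_reply(message: str) -> str:
    """Pick a reply: crisis redirection first, then an emotion-based reply."""
    if CRISIS_PATTERN.search(message):
        return (
            "I'm not a substitute for professional help. Please reach out "
            "to a mental health professional or a local crisis line."
        )
    return REPLIES[detect_emotion(message)]


if __name__ == "__main__":
    print(companion_reply("I feel so lonely tonight"))
```

Even this toy version shows the design choice that matters most: the check for messages that call for professional help runs before any attempt at a friendly reply.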

Risks associated with AI companions

Although AI companions offer several advantages, it is essential to recognize the associated risks. Experts recommend discretion when sharing personal information with these companions, but discretion is difficult to maintain when a person is emotionally vulnerable.
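One way to support that discretion is to strip obvious personal details from a message before it is sent. The sketch below is only an illustration under stated assumptions: the `redact` function and its two patterns are hypothetical, they catch only simple email addresses and phone numbers, and they are not a feature of any particular companion app.

```python
import re

# Simplified, illustrative patterns; they will miss many real-world formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(message: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    message = EMAIL.sub("[email removed]", message)
    return PHONE.sub("[phone removed]", message)


if __name__ == "__main__":
    print(redact("Write to me at jane.doe@example.com or call +1 555-123-4567"))
```

A filter like this cannot catch everything a person might reveal in conversation, such as names, addresses, or health details, so it complements the user's own judgment without replacing it.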

Let’s take a closer look at some of these risks.

Gender prejudices

- AI companions can be influenced by social prejudices that exist in human-dominated industries.

- Given the gender disparity in roles within the tech industry, with women holding fewer positions, it is not unreasonable to anticipate a potential gender bias in AI companions.

- Empathy, sympathy, understanding, and compassion are often associated with women. This observation is not intended to disparage men.

- However, if AI companions are designed primarily by men, the designers may have difficulty fully understanding and relating to the emotions and needs of female users.

- Understanding gender-specific emotions is a complex task, and women designers may be best positioned to create AI companions that can effectively meet those needs.

- While male designers may sincerely attempt to create empathetic AI companions, their understanding may have inherent limitations. Therefore, the risk of gender bias is still present.

Racism

- In 2016, Microsoft introduced an AI chatbot called Tay, with disastrous results. Tay went on a racist tirade, quoting Hitler, expressing Nazi ideals, and showing hatred towards Jews. It also cited racist tweets from Donald Trump.

- Twitter users purposely tested Tay with provocative messages, which led to the spread of racist content. Microsoft issued an apology for the incident and subsequently shut down Tay.

- This incident highlights a worrying fact: AI companions can absorb and reproduce biases aimed at specific demographics and communities, whether through their design or through the content they learn from.

- In her 2018 book Algorithms of Oppression, Internet studies scholar Safiya U. Noble showed that searches for terms such as “black girls,” “Latina girls,” and “Asian girls” returned inappropriate and pornographic content.

- These cases underscore the importance of addressing bias and ensuring ethical design practices in AI development. Fighting for inclusion, diversity, and equity is crucial to avoid perpetuating harmful stereotypes or discriminatory behavior.

Conclusion

AI companions have the potential to partially fill the gap left by a society that is becoming increasingly individualistic and in which social interactions are declining. However, it is essential to recognize that companion AI is still a developing concept.

AI companions may not be ideal partners for specific racial or gender groups because they are susceptible to perpetuating stereotypes. In some cases, they may inadvertently create new problems rather than solve existing ones.

The danger lies in AI companions’ possible reinforcement of prejudices and stereotypes. Without careful design and consideration of ethical implications, these technologies can inadvertently perpetuate harmful narratives or discriminatory behaviors.

It is essential to prioritize inclusion, equity, and diversity in developing and deploying AI companions to mitigate the risks they pose and ensure they contribute positively to society.